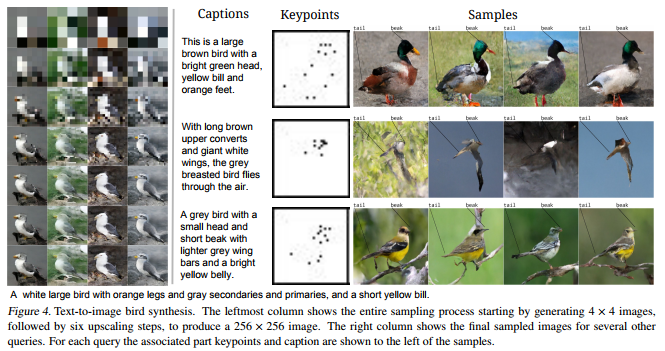

A while ago, I blogged about this new algorithm, pix2code, which takes in pictures of graphical user interfaces and outputs the underlying code. Today, I discovered another fantastic algorithm, by Scott Reed and his colleagues at Google Deepmind. txt2pix would be a catchy name for this algorithm, as it can take in a fairly complex sentence (e.g., “a grey bird with a black head, orange eyes, and a yellow beak“) and generate a completely new and unique image based on its content. In their recently published paper, they elaborate on the algorithms inner workings.

Scott and his team have been working on this project for quite some time. The early version of the algorithm generated an image one pixel at a time, but it had difficulties generating large or high-quality images. After picking a starting pixel to generate, any consecutively generated pixel the algorithm generates needs to align with its neighbours. For example, if pixel A is the first pixel in the generation of the yellow beak of a bird, any pixels that are created in the neighbourhood of that pixel should take into account that pixel A is trying to visualize a yellow beak, and behave accordingly: either continuing the beak, or ending the beak and starting on another element of the image.

The problem with such an iterative approach (i.e., pixel by pixel) is that it can take a very long time for a computer to generate an image. Considering that a fairly small image, say 256 by 256 pixels, already contains 65.536 pixels, each of which needs to be generated while considering all its neighbours and keeping in mind the bigger picture. In the most recent, updated version of the algorithm, Scott and his team have allowed the generation of multiple unrelated pixels simultaneously at different ‘zones’ of the image. Hence the Parallel in Parallel Multiscale Autoregressive Density Estimation. With this parallel approach, the algorithm can now generate the pixels representing the yellow beak in one area of the image, while simultaneously generating pixels for the bird’s wings and the branch it’s sitting on at different sections of the image. This speeds up the process quite extensively, demanding less computation time, thus allowing for quicker image generation.

I can definitely recommend that you check out Scott Reeds’ twitter feed for some amazing animated GIFs of the generation process:

Sampling animations for Parallel Multiscale Autoregressive Density Estimation. pic.twitter.com/rNabVgzPGa

— Scott Reed (@scott_e_reed) 13 maart 2017

Some more animations: pic.twitter.com/EptRR6iIZ4

— Scott Reed (@scott_e_reed) 13 maart 2017

One more animation: pic.twitter.com/fdssYx18PY

— Scott Reed (@scott_e_reed) 13 maart 2017

If you want to know more details behind the algorithm but do not fancy reading the entire paper, I recommend this short explanation video by Károly Zsolnai-Fehér (what a name!) of Two Minute papers: