R users have been using the twitteR package by Jeff Gentry to mine tweets for several years now. However, a recent blog post suggests that a newer package provides a better mining tool: rtweet by Michael Kearney (GitHub).

Both packages use a similar setup and require you to do some prep work by creating a Twitter “app” (see the package instructions). However, rtweet can save you considerable API time and post-API munging time. This is demonstrated by the examples below, where Twitter is searched for #rstats-tagged tweets, first using twitteR, then using rtweet.

```r
library(twitteR)
# this relies on you setting up an app in apps.twitter.com
setup_twitter_oauth(
  consumer_key = Sys.getenv("TWITTER_CONSUMER_KEY"),
  consumer_secret = Sys.getenv("TWITTER_CONSUMER_SECRET")
)
r_folks <- searchTwitter("#rstats", n = 300)
str(r_folks, 1)
## List of 300
## $ :Reference class 'status' [package "twitteR"] with 17 fields
## ..and 53 methods, of which 39 are possibly relevant
## $ :Reference class 'status' [package "twitteR"] with 17 fields
## ..and 53 methods, of which 39 are possibly relevant
## $ :Reference class 'status' [package "twitteR"] with 17 fields
## ..and 53 methods, of which 39 are possibly relevant
str(r_folks[1])
## List of 1
## $ :Reference class 'status' [package "twitteR"] with 17 fields
## ..$ text : chr "RT @historying: Wow. This is an enormously helpful tutorial by @vivalosburros for anyone interested in mapping "| __truncated__
## ..$ favorited : logi FALSE
## ..$ favoriteCount: num 0
## ..$ replyToSN : chr(0)
## ..$ created : POSIXct[1:1], format: "2017-10-22 17:18:31"
## ..$ truncated : logi FALSE
## ..$ replyToSID : chr(0)
## ..$ id : chr "922150185916157952"
## ..$ replyToUID : chr(0)
## ..$ statusSource : chr "Twitter for Android"
## ..$ screenName : chr "jasonrhody"
## ..$ retweetCount : num 3
## ..$ isRetweet : logi TRUE
## ..$ retweeted : logi FALSE
## ..$ longitude : chr(0)
## ..$ latitude : chr(0)
## ..$ urls :'data.frame': 0 obs. of 4 variables:
## .. ..$ url : chr(0)
## .. ..$ expanded_url: chr(0)
## .. ..$ dispaly_url : chr(0)
## .. ..$ indices : num(0)
## ..and 53 methods, of which 39 are possibly relevant:
## .. getCreated, getFavoriteCount, getFavorited, getId, getIsRetweet, getLatitude, getLongitude, getReplyToSID,
## .. getReplyToSN, getReplyToUID, getRetweetCount, getRetweeted, getRetweeters, getRetweets, getScreenName,
## .. getStatusSource, getText, getTruncated, getUrls, initialize, setCreated, setFavoriteCount, setFavorited, setId,
## .. setIsRetweet, setLatitude, setLongitude, setReplyToSID, setReplyToSN, setReplyToUID, setRetweetCount,
## .. setRetweeted, setScreenName, setStatusSource, setText, setTruncated, setUrls, toDataFrame, toDataFrame#twitterObj
```

The above operations required only several seconds to complete. The returned data is definitely usable, but not in the handiest format: the package maps the Twitter API onto custom R objects. It’s elegant, but likely overkill for most operations. Here’s the rtweet version:

library(rtweet)

# this relies on you setting up an app in apps.twitter.com

create_token(

app = Sys.getenv("TWITTER_APP"),

consumer_key = Sys.getenv("TWITTER_CONSUMER_KEY"),

consumer_secret = Sys.getenv("TWITTER_CONSUMER_SECRET")

) -> twitter_token

saveRDS(twitter_token, "~/.rtweet-oauth.rds")

# ideally put this in ~/.Renviron

Sys.setenv(TWITTER_PAT=path.expand("~/.rtweet-oauth.rds"))

rtweet_folks <- search_tweets("#rstats", n=300)

dplyr::glimpse(rtweet_folks)

## Observations: 300

## Variables: 35

## $ screen_name "AndySugs", "jsbreker", "__rahulgupta__", "AndySugs", "jasonrhody", "sibanjan...

## $ user_id "230403822", "703927710", "752359265394909184", "230403822", "14184263", "863...

## $ created_at 2017-10-22 17:23:13, 2017-10-22 17:19:48, 2017-10-22 17:19:39, 2017-10-22 17...

## $ status_id "922151366767906819", "922150507745079297", "922150470382125057", "9221504090...

## $ text "RT: (Rbloggers)Markets Performance after Election: Day 239 https://t.co/D1...

## $ retweet_count 0, 0, 9, 0, 3, 1, 1, 57, 57, 103, 10, 10, 0, 0, 0, 34, 0, 0, 642, 34, 1, 1, 1...

## $ favorite_count 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0,...

## $ is_quote_status FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, ...

## $ quote_status_id NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ is_retweet FALSE, FALSE, TRUE, FALSE, TRUE, TRUE, FALSE, TRUE, TRUE, TRUE, TRUE, TRUE, F...

## $ retweet_status_id NA, NA, "922085241493360642", NA, "921782329936408576", "922149318550843393",...

## $ in_reply_to_status_status_id NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ in_reply_to_status_user_id NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ in_reply_to_status_screen_name NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ lang "en", "en", "en", "en", "en", "en", "en", "en", "en", "en", "en", "en", "ro",...

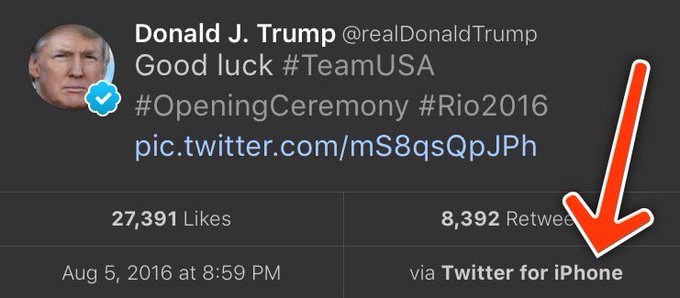

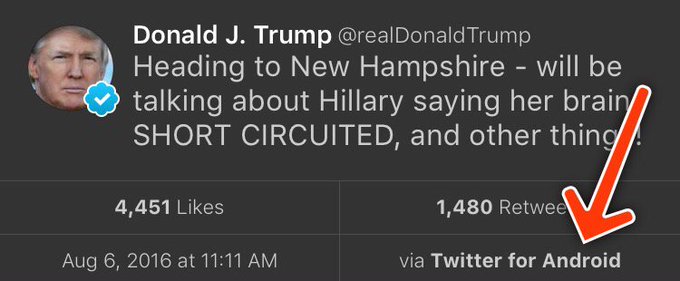

## $ source "IFTTT", "Twitter for iPhone", "GaggleAMP", "IFTTT", "Twitter for Android", "...

## $ media_id NA, "922150500237062144", NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, "92...

## $ media_url NA, "http://pbs.twimg.com/media/DMwi_oQUMAAdx5A.jpg", NA, NA, NA, NA, NA, NA,...

## $ media_url_expanded NA, "https://twitter.com/jsbreker/status/922150507745079297/photo/1", NA, NA,...

## $ urls NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ urls_display "ift.tt/2xe1xrR", NA, NA, "ift.tt/2xe1xrR", NA, "bit.ly/2yAAL0M", "bit.ly/2yA...

## $ urls_expanded "http://ift.tt/2xe1xrR", NA, NA, "http://ift.tt/2xe1xrR", NA, "http://bit.ly/...

## $ mentions_screen_name NA, NA, "DataRobot", NA, "historying vivalosburros", "NoorDinTech ikashnitsky...

## $ mentions_user_id NA, NA, "622519917", NA, "18521423 304837258", "2511247075 739773414316118017...

## $ symbols NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ hashtags "rstats DataScience", "Rstats ACSmtg", "rstats", "rstats DataScience", "rstat...

## $ coordinates NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ place_id NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ place_type NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ place_name NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ place_full_name NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ country_code NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ country NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ bounding_box_coordinates NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...

## $ bounding_box_type NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N...This operation took equal to less time but provides the data in a tidy, immediately usable structure.
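
To make the contrast concrete, here is a minimal base-R sketch of the flattening step that turns a list of per-tweet records into a tidy data frame (one row per tweet, one column per field). The records below are hypothetical plain named lists, not real twitteR `status` objects; for those, twitteR provides its own `twListToDF()` helper.

```r
# Hypothetical toy records: plain named lists standing in for parsed tweets.
# They are NOT real twitteR status objects and NOT real data.
records <- list(
  list(screen_name = "user_a", text = "Trying #rstats today", retweet_count = 0),
  list(screen_name = "user_b", text = "New #rstats package", retweet_count = 3),
  list(screen_name = "user_a", text = "More #rstats tips", retweet_count = 1)
)

# Turn each record into a one-row data frame, then stack the rows:
# the result has one row per tweet and one column per field.
tweets_df <- do.call(rbind, lapply(records, as.data.frame, stringsAsFactors = FALSE))
str(tweets_df)

# Tidy data works directly with ordinary data frame tools,
# e.g. total retweets per user:
retweets_per_user <- aggregate(retweet_count ~ screen_name, data = tweets_df, FUN = sum)
retweets_per_user
```

With rtweet this step is unnecessary, since `search_tweets()` already returns a data frame in this shape.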

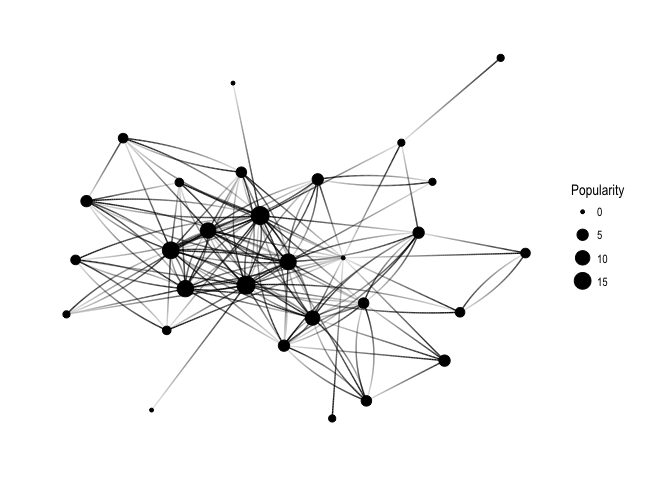

On the rtweet website, you can read about the additional functionality this new package provides. For instance, ts_plot() provides a quick visual of the frequency of tweets. It’s possible to aggregate by the minute, i.e., by = "mins", or by some number of seconds, e.g., by = "15 secs".

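```r
# Plot time series of all tweets aggregated by second
# (`rt` is a data frame of tweets; here it reuses the search results from above)
rt <- rtweet_folks
ts_plot(rt, by = "secs")
```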
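
The aggregation behind such a plot amounts to binning timestamps into fixed intervals and counting tweets per bin. The sketch below shows that idea in base R with `cut()`; it is only an illustration under the assumption that this is roughly what happens before plotting, not rtweet's own code, and the timestamps are made up.

```r
# Hypothetical toy timestamps standing in for the created_at column of a
# tweets data frame (not real data).
created_at <- as.POSIXct(c(
  "2017-10-22 17:18:01", "2017-10-22 17:18:09", "2017-10-22 17:18:14",
  "2017-10-22 17:18:20", "2017-10-22 17:18:47"
), tz = "UTC")

# Assign each timestamp to a 15-second interval, analogous to by = "15 secs".
bins <- cut(created_at, breaks = "15 secs")

# Count tweets per interval; empty intervals are kept with a count of zero.
counts <- as.data.frame(table(time = bins), responseName = "freq")
counts$time <- as.POSIXct(as.character(counts$time), tz = "UTC")
counts
```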

ts_filter() creates a time-series-like data structure consisting of “time” (the interval of time determined via the by argument), “freq” (the number of observations, or tweets, that fall within the corresponding interval), and “filter” (a label representing the filtering rule used to subset the data). If no filter is provided, the returned data object still includes a “filter” variable, but all of its entries are blank (""), indicating that no filter was used. Otherwise, ts_filter() uses the regular expressions supplied to the filter argument as values for the filter variable. To give the filters readable labels, users may also provide a character vector via the key parameter.
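
To make the described time/freq/filter structure concrete, here is an illustrative base-R sketch. The function `ts_filter_sketch()` is hypothetical and is not rtweet's implementation; it simply follows the rules in the paragraph above (blank labels when no filter is given, regexes as labels otherwise, `key` overriding them), applied to made-up tweets.

```r
# Illustrative sketch (not rtweet's implementation) of the structure described
# above: one row per time interval and filter, with columns time, freq, filter.
# ts_filter_sketch() is a hypothetical name.
ts_filter_sketch <- function(created_at, text, by = "mins",
                             filter = NULL, key = NULL) {
  bins <- cut(created_at, breaks = by)
  tz <- attr(created_at, "tzone")
  if (is.null(tz)) tz <- ""
  if (is.null(filter)) {
    # No filter: a single series whose "filter" entries are all blank ("")
    filter <- ""
    labels <- ""
  } else {
    # Labels default to the regular expressions; `key` replaces them if given
    labels <- if (is.null(key)) filter else key
  }
  series <- lapply(seq_along(filter), function(i) {
    keep <- if (filter[i] == "") rep(TRUE, length(text)) else grepl(filter[i], text)
    data.frame(
      time = as.POSIXct(levels(bins), tz = tz[1]),
      # Subsetting a factor keeps all levels, so empty intervals count as 0
      freq = as.vector(table(bins[keep])),
      filter = labels[i],
      stringsAsFactors = FALSE
    )
  })
  do.call(rbind, series)
}

# Hypothetical toy data (not real tweets)
created_at <- as.POSIXct(c("2017-10-22 17:18:05", "2017-10-22 17:18:40",
                           "2017-10-22 17:19:10", "2017-10-22 17:20:30"), tz = "UTC")
tweet_text <- c("New #rstats post", "#rstats tips", "Learning ggplot2", "#rstats and ggplot2")

ts_filter_sketch(created_at, tweet_text, by = "mins")
ts_filter_sketch(created_at, tweet_text, by = "mins",
                 filter = c("rstats", "ggplot2"), key = c("R", "ggplot2"))
```

Stacking one data frame per filter keeps the result in long format, which is the shape a plotting function can map to one line per filter label.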

```r
library(dplyr) # provides the %>% pipe
# plot multiple time series, one per screen name, by first grouping the data
rt %>%
  dplyr::group_by(screen_name) %>%
  # The pipe operator allows you to combine this with ts_plot
  # without things getting too messy.
  ts_plot() +
  ggplot2::labs(
    title = "Tweets during election day for the 2016 U.S. election",
    subtitle = "Tweets collected, parsed, and plotted using `rtweet`"
  )
```

The developer cautions that these plots often resemble frowny faces: the first and last points appear significantly lower than the rest. This happens because the first and last time intervals are artificially shortened by the connection and disconnection processes. To remedy this, users may specify trim = TRUE to drop the first and last observation of each time series.
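
The trimming idea itself is simple to express. The sketch below drops the first and last observation of each series in a long-format time/freq/filter data frame; `trim_ends()` is a hypothetical helper written for illustration, not rtweet's implementation, and the counts are made up to mimic the frowny shape.

```r
# Illustrative sketch of the idea behind trim = TRUE: drop the first and last
# observation of each time series. trim_ends() is a hypothetical helper,
# not rtweet's implementation.
trim_ends <- function(ts_data, group = "filter") {
  parts <- split(ts_data, ts_data[[group]])
  trimmed <- lapply(parts, function(d) {
    d <- d[order(d$time), ]
    # Series with two or fewer points are dropped entirely
    if (nrow(d) <= 2) d[0, ] else d[-c(1, nrow(d)), ]
  })
  out <- do.call(rbind, trimmed)
  rownames(out) <- NULL
  out
}

# Hypothetical "frowny" series: low counts at both ends (not real data)
ts_data <- data.frame(
  time = as.POSIXct("2017-10-22 17:18:00", tz = "UTC") + 60 * rep(0:3, times = 2),
  freq = c(1, 9, 8, 2, 0, 5, 6, 1),
  filter = rep(c("A", "B"), each = 4),
  stringsAsFactors = FALSE
)
trim_ends(ts_data)
```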

Give rtweet a try and let me know whether you prefer it over twitteR.