Did you know that dragonflies are among the most effective and accurate predators alive, even though their brains consist of very few neurons? Neuroscientist Greg Gage and his colleagues studied how a dragonfly locks onto its prey and captures it within milliseconds. In fact, a dragonfly seems to be little more than a small neural network hooked up to some wings, optimized through millions of years of evolution.

Tag: youtube

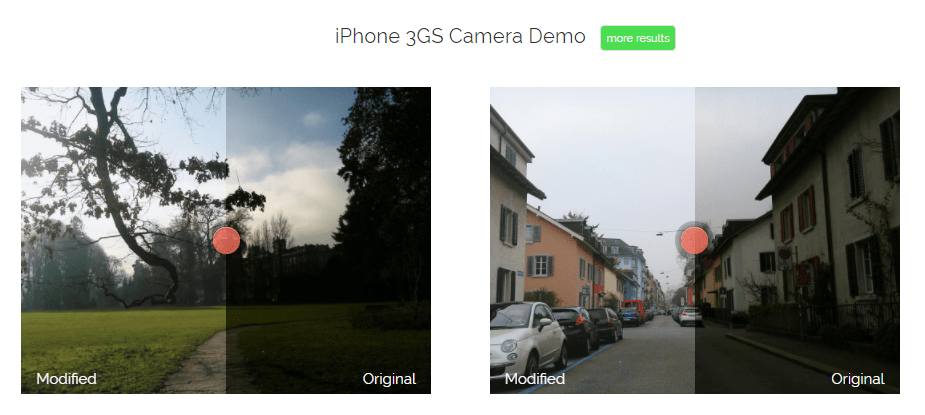

Super Resolution: A Photo Enhancer AI

In the video below, one of my favorite YouTube channels (Two Minute Papers) discusses a new super resolution project in which researchers taught a neural network to improve low-quality photos. The researchers took the same picture with multiple cameras of varying quality and let a neural network learn how the lowest-quality pictures can be adjusted to more closely resemble their high-quality counterparts. A very interesting approach, and the results are just mind-boggling:

The scholars were nice enough not only to publish the paper open access, but also to open-source the data. You can download a 125 MB sample here or the original full 64 GB dataset here.
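To make the paired-training idea a bit more concrete, here is a toy sketch in Python. It is not the researchers' method: instead of a neural network it fits a single gain and offset, and the pixel values and the relation between them are made up for illustration. The principle is the same, though: given pairs of low-quality and high-quality values, adjust the parameters until the enhanced low-quality values resemble their high-quality counterparts.

```python
# Toy paired training: learn to map low-quality pixel values to high-quality ones.
# Hypothetical data: each "high" value is a brightened version of the "low" value.
low = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
high = [1.5 * x + 0.1 for x in low]  # made-up relation, for illustration only


def fit_enhancer(low, high, lr=0.5, steps=2000):
    """Fit high ~ gain * low + offset by gradient descent on mean squared error."""
    gain, offset = 1.0, 0.0  # start from the identity mapping
    n = len(low)
    for _ in range(steps):
        # Difference between the current enhancement and the high-quality target
        errors = [gain * x + offset - y for x, y in zip(low, high)]
        # Gradients of the mean squared error with respect to gain and offset
        grad_gain = 2.0 * sum(e * x for e, x in zip(errors, low)) / n
        grad_offset = 2.0 * sum(errors) / n
        gain -= lr * grad_gain
        offset -= lr * grad_offset
    return gain, offset


gain, offset = fit_enhancer(low, high)
enhanced = [gain * x + offset for x in low]
```

A real super resolution model replaces the two parameters with millions of network weights and the pixel pairs with aligned photo pairs, but the training loop has the same shape.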

The wondrous state of Computer Vision, and what the algorithms actually “see”

The field of computer vision tries to replicate our human visual capabilities, allowing computers to perceive their environment in the same way that you and I do. The recent breakthroughs in this field are super exciting and I couldn’t help but share them with you.

In the TED talk below, Joseph Redmon (PhD at the University of Washington) showcases the latest advances in computer vision, resulting, among other things, from his open-source research on Darknet, a neural network framework written in C. Most impressive is the insane speed with which contemporary algorithms are able to classify objects. Joseph demonstrates this by detecting all kinds of random stuff practically in real time on his phone! Moreover, you’ve got to love how well the system works: even the ties worn in the audience are classified correctly!

PS: Please have a look at Joseph’s amazing My Little Pony-themed résumé.

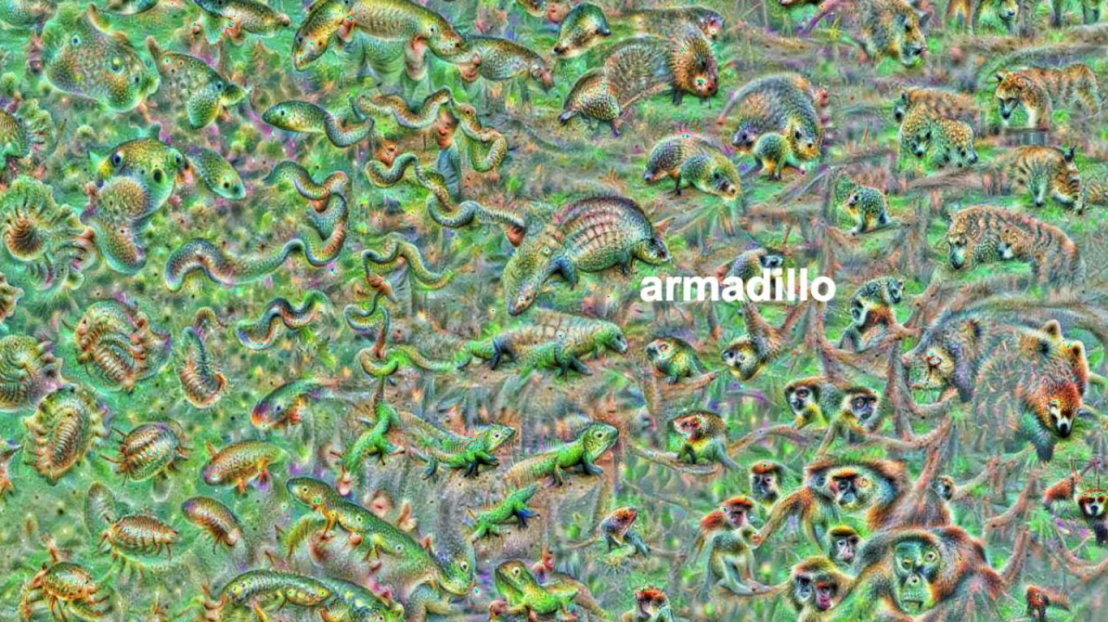

The second talk, below, is more scientific and maybe even a bit dry at the start. Blaise Aguera y Arcas (engineer at Google) starts with a historical overview of brain research but, fortunately, this serves a purpose: about 6 minutes in, Blaise provides one of the best explanations I have yet heard of how a neural network processes images and learns to perceive and classify the underlying patterns. Blaise continues with a similarly great explanation of how this process can be reversed to generate weird, Escher-like images that one could consider creative art:

0_o pic.twitter.com/M3yB6rzTiO (Andrej Karpathy, @karpathy, October 28, 2017)

You can find Blaise’s presentation here:

If you want to learn more about this process of image synthesis through deep learning, I can recommend the scientific papers discussed by one of my favorite YouTube channels, Two Minute Papers. Karoly’s videos, such as the ones below, discuss many of the latest developments:

Let me know if you have any other videos, papers, or materials you think are worthwhile!

Cryptocurrency and Blockchain explained by 3Blue1Brown

Grant Sanderson is the owner of the YouTube channel 3Blue1Brown, which aims to explain math and stats concepts in an entertaining way. Using animations, Grant tackles difficult problems and explains them in understandable language. I was already familiar with his great explanatory videos on Linear Algebra and Neural Networks, but this new video on cryptocurrencies and blockchain (below) is definitely one of the best explanations of Bitcoin I’ve seen so far:

GAN: Generative Adversarial Networks

A Generative Adversarial Network, or GAN for short, is a machine learning architecture in which two neural networks compete against each other. One of them functions as a discriminator, seeking to optimize its classification of data (e.g., determining whether a picture of a cat is real or generated). The other one functions as a generator, seeking to generate new data that fools the discriminator (e.g., creating realistic fake images of cats). Over time, the generator network becomes increasingly good at simulating realistic data and mimicking real life.
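The competition between the two networks can be sketched through their loss functions. The snippet below is a minimal illustration, assuming the discriminator outputs a probability between 0 and 1 that a sample is real; the function names and example scores are made up, and the generator loss shown is the common "non-saturating" variant rather than the only possible choice.

```python
import math

EPS = 1e-12  # avoids log(0) for extreme scores


def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss: low when real samples score near 1 and fakes near 0."""
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating loss: low when the discriminator scores fakes near 1."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores for two real and two generated images
d_real = [0.9, 0.8]
d_fake = [0.1, 0.2]

print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

With these scores the discriminator is doing well and the generator poorly. Training alternates between updating each network to lower its own loss, which is what drives the generator toward ever more realistic output.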

The concept of GANs was introduced by Ian Goodfellow in 2014, whom we know from the Machine Learning & Deep Learning book. Although GANs are computationally heavy and still undergoing major development, their potential implications are widespread. We can see these architectures taking over all sorts of creative work where generating new “data” is the main task. Think, for instance, of designing clothes, creating video footage, writing novels, animating movies, or even whole video games. One of my favorite YouTube channels discusses several of their recent applications, and here are a few of my favorites:

- App-building AI: pix2code

- Generating Images from Scratch: text2pix

- Image Synthesis from Text

- Image Editing with GAN

- AI learns to synthesize animal pictures

- AI makes drawing of your photo: pix2pix

If you want to know more about GANs, Analytics Vidhya hosts a short introduction, but I personally prefer this one by Rob Miles via Computerphile:

If you want to try out these GANs yourself but do not have the programming experience, Reiichiro Nakano made a GAN playground in what seems to be JavaScript, where you can play around with the discriminator and the generator to create an adversarial network that identifies and generates images of numbers.

Neural Networks 101

Over the past month, a video by 3Blue1Brown has been trending on YouTube, already accumulating over a quarter of a million views. It only lasts 10 minutes but provides a very good and intuitive explanation of the inner workings of Neural Networks (NN):

The Machine Learning & Deep Learning book I wrote about recently provides a more substantial explanation of the different NNs and their inner workings. Neural nets come in various flavors, and my list of Data Science, Machine Learning, & Statistics Resources includes useful cheatsheets and other information, such as the architecture map below.

If you still haven’t had enough, Daniel Shiffman demonstrates how to code Neural Networks in Processing (Java), and the video displays precisely what happens behind the scenes. Finally, MIT has made their AI course material open-source, and it includes two 45-minute lectures on NNs. The lecturing professor, Patrick Winston, isn’t much of a fan of these “bulldozer” algorithms. He has a stronger preference for “more sophisticated” mathematical learning through, for instance, Support Vector Machines.