Version control is an essential tool for any software developer. Hence, any respectable data scientist has to make sure his/her analysis programs and machine learning pipelines are reproducible and maintainable through version control.

Often, we use git for version control. If you don’t know what git is yet, I advise you begin here. If you work in R, start here and here. If you work in Python, start here.

This blog is intended for those already familiar working with git, but who want to learn how to write better, more informative git commit messages. Actually, this blog is just a summary fragment of this original blog by Chris Beams, which I thought deserved a wider audience.

Chris’ 7 rules of great Git commit messaging

- Separate subject from body with a blank line

- Limit the subject line to 50 characters

- Capitalize the subject line

- Do not end the subject line with a period

- Use the imperative mood in the subject line

- Wrap the body at 72 characters

- Use the body to explain what and why vs. how

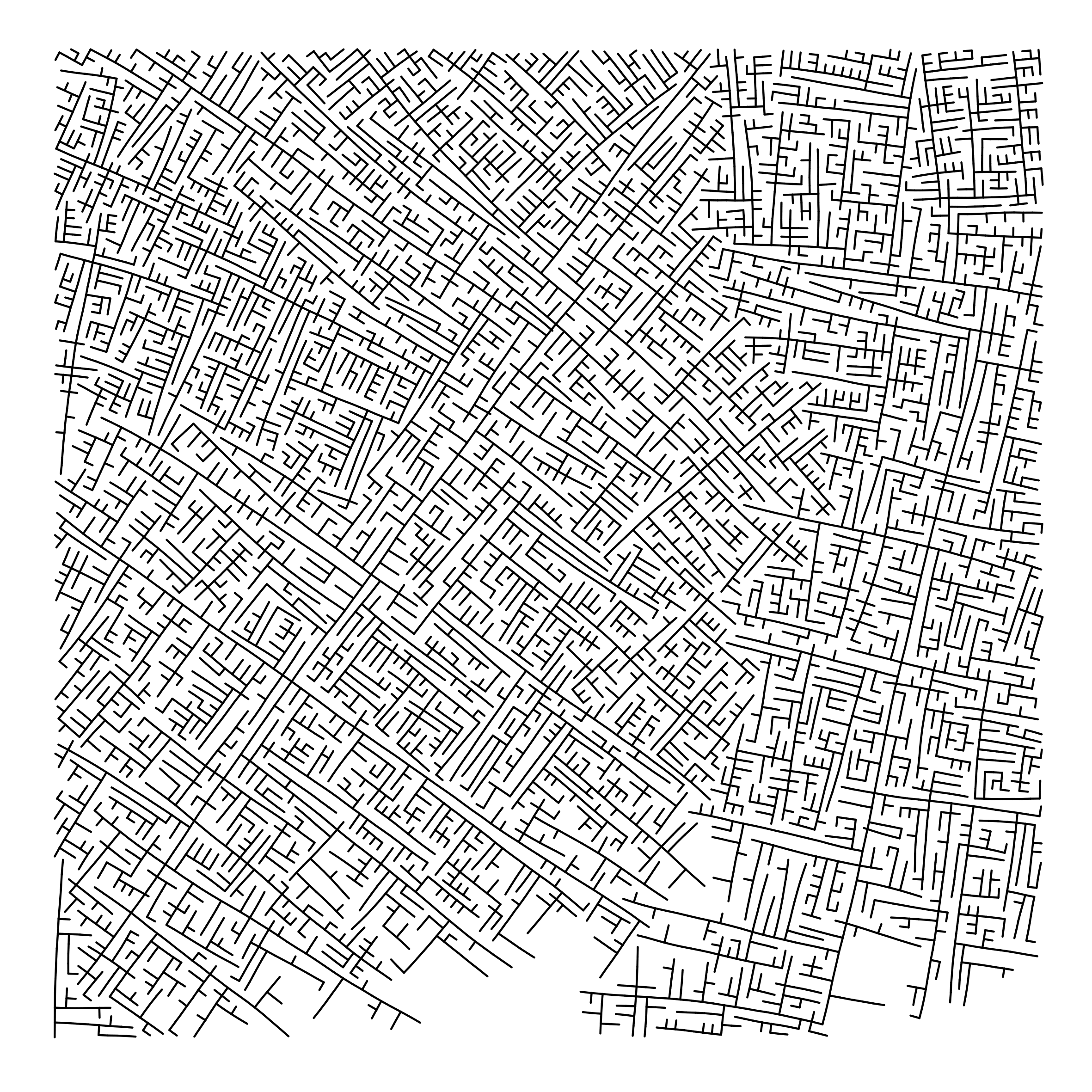

For example:

Summarize changes in around 50 characters or less

More detailed explanatory text, if necessary. Wrap it to about 72

characters or so. In some contexts, the first line is treated as the

subject of the commit and the rest of the text as the body. The

blank line separating the summary from the body is critical (unless

you omit the body entirely); various tools like `log`, `shortlog`

and `rebase` can get confused if you run the two together.

Explain the problem that this commit is solving. Focus on why you

are making this change as opposed to how (the code explains that).

Are there side effects or other unintuitive consequences of this

change? Here's the place to explain them.

Further paragraphs come after blank lines.

- Bullet points are okay, too

- Typically a hyphen or asterisk is used for the bullet, preceded

by a single space, with blank lines in between, but conventions

vary here

If you use an issue tracker, put references to them at the bottom,

like this:

Resolves: #123

See also: #456, #789

If you’re having a hard time summarizing your commits in a single line or message, you might be committing too many changes at once. Instead, you should try to aim for what’s called atomic commits.

Cover image by XKCD#1296