I have played my fair share of Wordle.

I’m not necessarily good at it, but most days I manage to solve the puzzle.

The experience is completely different with Semantle — a Wordle-inspired puzzle in which you also need to guess the word of the day.

Unlike Wordle, though, Semantle gives you unlimited guesses. And, boy, you will need them!

Like Wordle, Semantle gives you hints as to how close your guesses were to the secret word of the day.

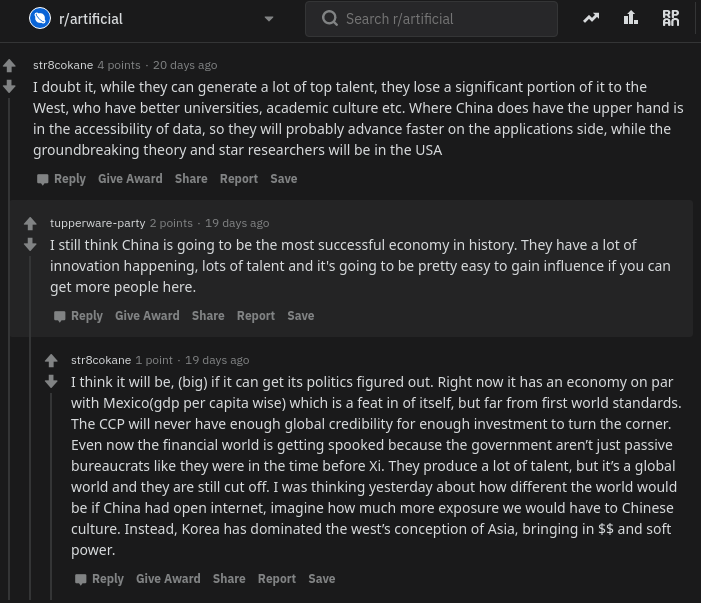

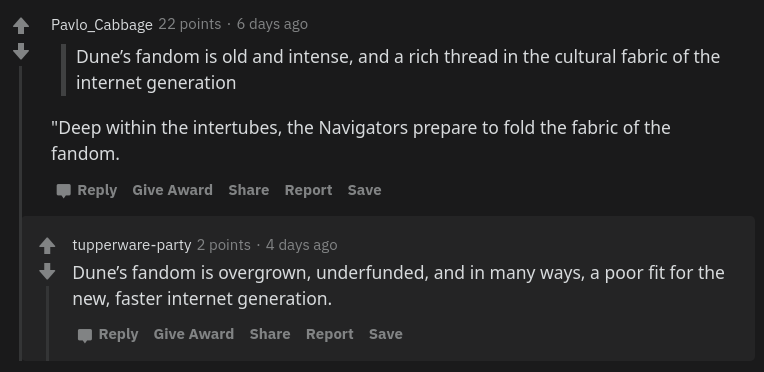

However, where Wordle shows you how good your guesses were in terms of the letters used, Semantle evaluates the semantic similarity of your guesses to the secret word. If your guess is among the 1000 words most similar to the secret word, Semantle also shows you how close it is, as in the picture above.

This semantic similarity comes from the field of Natural Language Processing (NLP) and roughly reflects how often words are used in similar contexts in natural language.

For instance, the words “love” and “hate” may seem like opposites, but they often score as similar because they are used in the same kinds of sentences. According to the Semantle FAQ, the actual opposite of “love” is probably something like “Arizona Diamondbacks” or “carburetor”.
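To make "used in similar contexts" a bit more concrete: word embedding models represent each word as a vector of numbers, and the similarity of two words is commonly measured as the cosine of the angle between their vectors. The sketch below is only an illustration. The three-dimensional vectors in `toy_vectors` are invented (real word2vec vectors have 300 dimensions), so the printed numbers say nothing about the actual scores in Semantle.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors, made up for illustration only.
toy_vectors = {
    "love": [0.9, 0.8, 0.1],
    "hate": [0.8, 0.9, 0.2],
    "carburetor": [-0.1, 0.1, 0.9],
}

love_hate = cosine_similarity(toy_vectors["love"], toy_vectors["hate"])
love_carb = cosine_similarity(toy_vectors["love"], toy_vectors["carburetor"])

print(f"love vs hate:       {love_hate:.2f}")
print(f"love vs carburetor: {love_carb:.2f}")
```

In this toy setup, “love” and “hate” point in almost the same direction and score high, while “carburetor” points somewhere else entirely and scores close to zero.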

Another example is yesterday’s solution (15 March 2022), when the secret word was circle. The ten closest words you could have guessed include circles and semicircle, but also more distinctive words such as corner and clockwise.

Further down the list you could have guessed relatively close words like saucer, dot, and parabola, but I would not have expected words like outwaited, weaved, and zipped.

Semantle scores the semantic similarity for almost all words used in the English language with a so-called word2vec model: GoogleNews-vectors-negative300.bin, a set of pretrained word vectors published by Google and built from a very large dataset of news articles.
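A list of "closest words" like the ones above could be produced roughly as follows. This is a minimal sketch, assuming every word in the vocabulary has a vector and that closeness is measured with cosine similarity. It is not Semantle's actual code: the function `rank_by_similarity`, the tiny vocabulary, and its vectors are all made up for illustration. With real data, the vectors would come from the word2vec file and the list would be cut off at 1000 words.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(secret, vectors, top_n):
    """Return the top_n (word, score) pairs closest to `secret`, best first."""
    secret_vec = vectors[secret]
    scored = [
        (word, cosine_similarity(secret_vec, vec))
        for word, vec in vectors.items()
        if word != secret  # the secret word itself is not a neighbour
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


# Hypothetical toy vectors, invented for illustration only.
toy_vectors = {
    "circle": [1.0, 0.1, 0.0],
    "circles": [0.95, 0.15, 0.05],
    "semicircle": [0.9, 0.3, 0.0],
    "saucer": [0.5, 0.6, 0.2],
    "zipped": [0.1, 0.2, 0.9],
}

nearest = rank_by_similarity("circle", toy_vectors, top_n=3)
for rank, (word, score) in enumerate(nearest, start=1):
    print(rank, word, round(score, 3))
```

A guess that appears in this ranked list gets a position (first closest, second closest, and so on); a guess outside it only gets its raw similarity score.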

Now, every day, one word is randomly selected as the secret word, and you can try to guess which one it is. I usually give up after 300 to 400 guesses, but my record was 76 guesses for uncovering the secret word world.

Try it out yourself: https://semantle.novalis.org/

And do share your epic wins and fails!