Below are dozens of very specific R tips and tricks. Some are valuable, useful, or productivity boosting. Others are just geeky fun.

More general helpful R packages and resources can be found in this list.

If you have additions, please comment below or contact me!

Completely new to R? → Start here!

Table of Contents

- RStudio tricks

- General tips

- Base R tricks

- R Markdown tricks

- Data manipulation tricks

- Data visualization tricks

RStudio

- RStudio Addins

- RStudio Keyboard Shortcuts

- RStudio easy tricks: tearable panes, command history, renaming in scope, outlining, snippets, and more

- Working with R projects and `here`

- Working with code snippets

- Working with code snippets (video)

- Stop RStudio from asking to save workspace

- Automatically save workspace in case of a crash / errors

- Edit several lines of code at once

- Press `ALT + left mouse button` to select and write on multiple lines simultaneously.

- Press `ALT + -` to insert a `<-` operator.

- Press `CTRL + SHIFT + M` to insert a `%>%` operator.

- Press `CTRL + SHIFT + F` to search all files in the directory or project.

- Press `CTRL + UP` to navigate your console history.

- Rename all variables with the same name (rename in scope)

- Press `CMD + ALT + SHIFT + M` (`CTRL + ALT + SHIFT + M` on Windows/Linux) to rename a variable within its scope, i.e. to rename all occurrences of that variable in a script.

- Press `TAB` inside "" (quotation marks / an empty string) to select a filename from your current directory, or to autocomplete a filename you started typing.

Many more shortcuts are available online here, and in RStudio under Tools → Keyboard Shortcuts Help.

General

- 6-step guide to learning R

- Change your default library location

- Copy any data straight into R objects with `datapasta`

- Copy Excel data straight into R data frames

- Read in observational data split over multiple rows

- Send documents to Google Drive using R

- Use `corrr` to simplify working with correlations

- Use `prettycode` to print highlighted console output

- Five ways to standardize column names to `snake_case` format

- Make screenshots with `magick::image_read('screenshot:')`

- Make regular expressions easy with the `RVerbalExpressions` package
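As a rough illustration of the `snake_case` tip, here is one possible base-R approach built on regular expressions. It is not necessarily one of the five methods from the linked post, and the helper name `to_snake_case()` is hypothetical.

```r
# Hypothetical helper: convert column names to snake_case using base R regexes
to_snake_case <- function(x) {
  x <- gsub("([a-z0-9])([A-Z])", "\\1_\\2", x)  # split camelCase: "lastName" -> "last_Name"
  x <- gsub("[^[:alnum:]]+", "_", x)            # spaces, dots, etc. become "_"
  x <- gsub("^_+|_+$", "", x)                   # trim leading/trailing underscores
  tolower(x)
}

df <- data.frame(`First Name` = "Ada", lastName = "Lovelace", Birth.Year = 1815,
                 check.names = FALSE)
names(df) <- to_snake_case(names(df))
names(df)  # -> "first_name", "last_name", "birth_year"
```

Dedicated packages cover more edge cases (acronyms, accents, duplicate names), so treat this as a starting point.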

Disclaimer: This page contains one or more links to Amazon.

Any purchases made through those links provide us with a small commission that helps to host this blog.

Useful base functions

- `str()` – explore the structure of an R object

- `trimws()` – trim trailing and/or leading whitespace

- `dput()` – dump an R object in the form of R code

- `cut()` – categorize values into intervals

- `intersect()` – returns the elements common to two vectors

- `union()` – returns all unique elements found in either of two vectors

- `setdiff()` – returns the elements of the first vector that are not in the second

- `interaction()` – computes a factor which represents the interaction of the given factors

- `formatC()` can be used to round numbers and force trailing zeros

- `formatC()` and `sprintf()` can be used to add leading/trailing characters

- `expand.grid()` – create a data frame from all combinations of the supplied vectors or factors

- `seq_along(myvec)` – generates the vector `1:length(myvec)`, but safely returns an empty vector when `myvec` is empty

- Initiate an empty data frame with header names
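A quick, runnable tour of several of the base functions above. The vectors and values are made up for illustration; note that `setdiff()` is asymmetric, so the argument order matters.

```r
a <- c(1, 2, 3, 4)
b <- c(3, 4, 5)

intersect(a, b)  # elements in both vectors: 3 4
union(a, b)      # all unique elements of either vector: 1 2 3 4 5
setdiff(a, b)    # elements of a that are not in b: 1 2
setdiff(b, a)    # reversed order gives a different answer: 5

# cut(): bin numbers into labelled intervals (right-closed by default)
ages <- c(5, 17, 34, 70)
age_group <- cut(ages, breaks = c(0, 18, 65, Inf),
                 labels = c("child", "adult", "senior"))
age_group        # child child adult senior (a factor)

# formatC() / sprintf(): trailing and leading zeros
formatC(3.1, format = "f", digits = 2)  # "3.10"
formatC(7, width = 3, flag = "0")       # "007"
sprintf("%03d", 7L)                     # "007"

# expand.grid(): one row per combination of the inputs
grid <- expand.grid(size = c("S", "L"), color = c("red", "blue"))
nrow(grid)       # 4

# seq_along() is safe for empty vectors, unlike 1:length()
empty <- c()
seq_along(empty)   # integer(0)
1:length(empty)    # 1 0, a classic source of loop bugs

# dput(): print an object as R code you can paste into a script or question
dput(head(mtcars[, 1:2], 2))

# An empty data frame that already has its column names and types
empty_df <- data.frame(id = integer(), name = character())
str(empty_df)
```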

- Functional programming tricks:

  - `switch()` can replace elaborate ifelse statements (see also)

  - `match.arg()` can check for arguments and values

  - The null-default operator (`%||%`) returns the first value that is not `NULL` (part of base R since 4.4.0; older code gets it from `rlang`)
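A small sketch of the three functional programming tricks. The functions `describe_unit()` and `summarise_vec()` are hypothetical examples; the fallback definition of `%||%` is only there for R versions older than 4.4.0, which do not ship the operator in base.

```r
# switch(): pick a branch by value instead of nesting ifelse()
describe_unit <- function(unit) {
  switch(unit,
    cm = "centimetres",
    m  = "metres",
    km = "kilometres",
    "unknown unit"   # an unnamed last argument acts as the default
  )
}

# match.arg(): validate an argument against the choices in the signature
summarise_vec <- function(x, type = c("mean", "median")) {
  type <- match.arg(type)  # defaults to the first choice; partial matches allowed
  switch(type, mean = mean(x), median = median(x))
}

# %||% is in base R from 4.4.0; define it for older versions
if (!exists("%||%", mode = "function")) {
  `%||%` <- function(x, y) if (is.null(x)) y else x
}

describe_unit("km")                # "kilometres"
describe_unit("mile")              # "unknown unit"
summarise_vec(c(1, 2, 10))         # mean: 4.333333
summarise_vec(c(1, 2, 10), "med")  # partial match on "median": 2
NULL %||% "fallback"               # "fallback"
"value" %||% "fallback"            # "value"
```

An invalid choice such as `summarise_vec(1, "mode")` stops with an informative error instead of silently doing something unexpected.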

- Convert a vector of strings to title case

- Quickly map a new set of values to an existing vector

- Calculate the derivative of a function expression
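The three tips above can be sketched with base R alone: `tools::toTitleCase()` for title case, a named vector used as a lookup table to remap values, and `D()` for symbolic derivatives. Whether the linked posts use exactly these functions is an assumption; the example data is hypothetical.

```r
# Title case with a function that ships with base R's tools package
titles <- tools::toTitleCase(c("the lord of the rings", "a tale of two cities"))
titles

# Map new values onto an existing vector with a named lookup vector
sizes  <- c("S", "M", "L", "M")
lookup <- c(S = "small", M = "medium", L = "large")
mapped <- unname(lookup[sizes])
mapped                  # "small" "medium" "large" "medium"

# Derivative of an expression: D() returns an unevaluated call
dfdx <- D(quote(x^2 + 3 * x), "x")
dfdx                    # an expression equivalent to 2 * x + 3
eval(dfdx, list(x = 2)) # 7
```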

- Specify `options()` in your script:

  - Prevent automatic factor columns using `options(stringsAsFactors = FALSE)` (the default since R 4.0.0)

  - Use `options(width = 60)` to change the default width of console output

  - Use `options(max.print = 100)` to change the default number of values printed in the console
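A minimal sketch of setting options in a script while keeping the change reversible: `options()` invisibly returns the previous values, so they can be restored at the end.

```r
# Set new values and keep the old ones
old_opts <- options(width = 60, max.print = 100)

getOption("width")  # 60
print(1:500)        # prints only the first 100 values, followed by a truncation note

# stringsAsFactors = FALSE is the default since R 4.0.0;
# passing it explicitly only changes behaviour on older R versions
df <- data.frame(x = c("a", "b"), stringsAsFactors = FALSE)
class(df$x)         # "character"

options(old_opts)   # restore the previous settings
```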

R Markdown

- Pimp my RMD: Overview of many R markdown tricks by Yan Holtz

- Save compiled images in folder with markdown

- Add caption to compiled tables with markdown

- Tabsets in markdown

- Foldable html content in markdown

- Reuse code chunks in markdown

- Generate Word documents with markdown

- Open URLs in a new window with `[text](url){target="_blank"}` in markdown

- Use `#<<` to highlight code

- Move to the next `xaringan` slide upon click (or Enter)

- Convert an R Markdown file (.Rmd) into an R script (.R) with `knitr::purl(input, output, documentation = 2)`

- Use `CTRL + SHIFT + 1:4` to zoom in on any single one of your RStudio panes. Use `ALT + CTRL + SHIFT + 0` to zoom back out.

- `knitr::read_chunk("your_script_name.R")` can be used to source in scripts that reside outside your current markdown file

- Use animations in your markdown files with the `gganimate` package and `header-includes: - \usepackage{animate}` in your YAML preamble

- Create a searchable, sortable HTML table in 1 line of code with `DT::datatable(mydf, filter = 'top')`
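To illustrate the `knitr::purl()` tip, this sketch writes a tiny hypothetical .Rmd file to a temporary folder and extracts its R code. It assumes `knitr` is installed; the `fence` variable only builds the three-backtick chunk delimiters.

```r
library(knitr)

# Build a small hypothetical .Rmd file in a temporary location
fence <- strrep("`", 3)
rmd <- tempfile(fileext = ".Rmd")
writeLines(c(
  "Some explanatory text.",
  "",
  paste0(fence, "{r setup}"),
  "x <- 1:10",
  "mean(x)",
  fence
), rmd)

# Extract the code; documentation = 2 keeps the prose as roxygen-style #' comments
r_script <- tempfile(fileext = ".R")
purl(input = rmd, output = r_script, documentation = 2, quiet = TRUE)

purled <- readLines(r_script)
cat(purled, sep = "\n")
```

With `documentation = 0` only the code is written out, which is handy when you just want a plain script.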

Data manipulation

- `readr::parse_number` extracts the numbers from raw / scraped text

- `stringr::str_pad` can be used to add leading or trailing characters (like zeros)

- `dplyr` tricks

  - `dplyr::case_when` replaces elaborate ifelse statements (Video)

  - `dplyr::everything` in combination with `dplyr::select` to reorder columns

  - Quickly count / tally observations within groups with `dplyr::count`, `dplyr::tally`, `dplyr::add_count` and `dplyr::add_tally`

  - Quickly filter the top categories / groups based on a variable with `forcats::fct_lump`

  - Apply the same filter to multiple columns with `dplyr::filter_all` or `dplyr::filter_if` in combination with `dplyr::all_vars` and `dplyr::any_vars` (superseded in current dplyr by `filter()` with `if_all()` / `if_any()`)

  - `dplyr::group_by_if` performs quick conditional grouping

  - Perform rowwise mutations / calculations using `dplyr::rowwise`

- `purrr` tricks

  - `purrr::map_df` to read in and merge all data files in a folder

  - Combine `purrr::map_df` and `fs::dir_ls` to read in and merge all data files following a specific pattern in a folder

  - Combine `list.files` and `purrr::map_df` to read in and merge all data files in a folder

- `broom::tidy` puts your model results in a tidy data frame

- Simpler correlation analysis with `corrr`

- `df %>% .$column_name` or `df %$% column_name` (the `magrittr` exposition pipe) can retrieve a column from a tibble

- `dplyr::coalesce` returns, for each row, the first non-missing value across several columns

- Display a fraction between 0 and 1 as a percentage with `scales::percent(myfraction)`

- Convert numbers that came in as strings with commas to R numbers with `readr::parse_number(mydf$mycol)`
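A sketch of a few of the tricks above, assuming `dplyr` is installed: `case_when()`, `coalesce()`, `count()`, and reading in all files in a folder. For the last one it swaps `purrr::map_df()` for `lapply()` plus `dplyr::bind_rows()`, which gives the same combined data frame. The data and file names are hypothetical.

```r
library(dplyr)

# Hypothetical example data with some missing values
sales <- tibble(
  region = c("north", "south", "north", "east", NA),
  amount = c(120, 80, 45, 300, 60),
  phone  = c(NA, "555-1234", NA, NA, "555-9999"),
  mobile = c("06-111", NA, NA, "06-333", NA)
)

sales2 <- sales %>%
  mutate(
    # case_when(): the first matching condition wins; TRUE ~ ... acts as the "else"
    size = case_when(
      amount >= 200 ~ "large",
      amount >= 100 ~ "medium",
      TRUE          ~ "small"
    ),
    # coalesce(): per row, the first non-missing value across the arguments
    contact = coalesce(mobile, phone, "no contact"),
    region  = coalesce(region, "unknown")
  )

# count() is shorthand for group_by() + summarise(n = n())
region_counts <- count(sales2, region, name = "n_orders")

# Read in and merge every CSV in a folder (lapply() + bind_rows() instead of purrr::map_df())
csv_dir <- tempfile("csvs")
dir.create(csv_dir)
write.csv(data.frame(id = 1:2, value = c(10, 20)), file.path(csv_dir, "a.csv"), row.names = FALSE)
write.csv(data.frame(id = 3L, value = 30), file.path(csv_dir, "b.csv"), row.names = FALSE)

files  <- list.files(csv_dir, pattern = "\\.csv$", full.names = TRUE)
merged <- bind_rows(lapply(files, read.csv))
```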

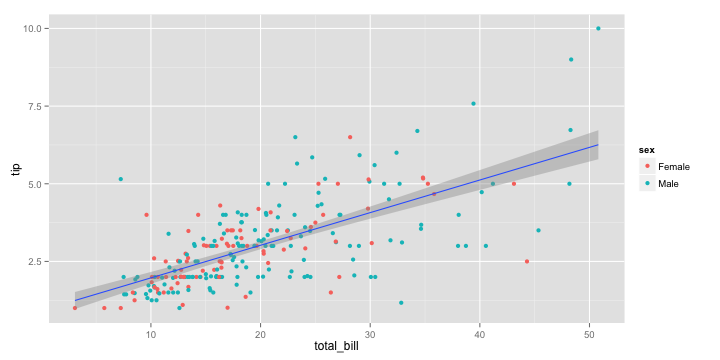

Data visualization

- `colors()` to see the names of all built-in colors

- `GGally::ggpairs` for beautiful pair-wise correlation plots

- `tidyr::complete` to get barplot spacing right

- Quickly visualize your whole dataset

- Create custom, corporate, reproducible color palettes and custom discrete color scales

- Standardize the colors of groups in your visualizations using named vectors

- `theme_set` to set a default ggplot2 theme

- Create your own ggplot2 theme

- Rearranging values and axes within ggplot2 facets

- Add line labels at the end of `geom_line()` lines by Simon Jackson

- Add `+ NULL` to the end of your ggplot2 chain during development, so you can comment out the last layer without leaving a dangling `+`

- Add `clip = "off"` to your coordinate system (e.g. `coord_cartesian(clip = "off")`) to draw outside the plot panel

- Remove point borders with `stroke = 0`

- Multicolored annotated text in ggplot2 by Andrew Whitby & Visuelle Data

- Combine plots using `patchwork` or `cowplot`

- Add a (corporate) logo to your plot using `magick`

- Use animations in your markdown files with the `gganimate` package and `header-includes: - \usepackage{animate}` in your YAML preamble

- If you pass a function to the `data` argument of a `geom_*()`, it applies that function to the plot data!

- Generate distributions in ggplot2 using the `stat_function` function: normal distributions, Student t-distributions, beta distributions, anything. See also here.
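Several of the ggplot2 tips combined in one hypothetical plot, assuming `ggplot2` is installed: a named color vector, `theme_set()`, `stroke = 0`, a function passed as `data`, `stat_function()`, `clip = "off"` and a trailing `+ NULL`. The data and colors are made up.

```r
library(ggplot2)

df <- data.frame(
  x     = 1:6,
  y     = c(2, 4, 3, 6, 5, 7),
  group = c("a", "a", "b", "b", "c", "c")
)

# Named vector: each group keeps the same color in every plot that uses it
group_colors <- c(a = "#1b9e77", b = "#d95f02", c = "#7570b3")

theme_set(theme_minimal())  # default theme for all following plots

p <- ggplot(df, aes(x, y, color = group)) +
  geom_point(size = 3, stroke = 0) +              # stroke = 0 removes point borders
  geom_point(data = function(d) d[d$y > 5, ],     # a function as `data` filters the plot data
             shape = 1, size = 6, color = "black") +
  stat_function(fun = function(x) x + 1,          # draws the function across the x range
                inherit.aes = FALSE, color = "grey50") +
  scale_color_manual(values = group_colors) +
  coord_cartesian(clip = "off") +                 # allow drawing outside the panel
  NULL                                            # any line above can now be commented out safely

p
```

Swapping `function(x) x + 1` for `dnorm` (with `args = list(mean = 3, sd = 1)`) gives the distribution curves mentioned in the last tip.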

Fun

- Nonogram puzzles

- Star Wars animation

- Snow animation

- Jingle Bells tune

- Base R Christmas tree plot

- Star Wars tune

- Draw Star Wars Droid BB-8

- Animated personalized motivational GIF

- Animated grey color gradient illusion

- Animated sprites

- Animated fireworks

- Valentine’s day base R heart by Pranav Pandya

- Valentine’s day heart in `ggplot2` by David Robinson

- Valentine’s day heart v2 in `ggplot2` by JonTheGeek

- Valentine’s day heart in `gganimate` (old API) by David Robinson

- Valentine’s day heart in `gganimate` (updated API) by Oscar de Léon

- Valentine’s day multiple hearts in `gganimate` (old API) by David Kretch

- Draw a bunny with `install.packages("onion"); require(onion); data(bunny); p3d(bunny,theta=3,phi=104,box=FALSE)`, via R-bloggers

- “Distracted Boyfriend” meme in `ggplot2`

- Pixelated Halloween Jack-o-Lantern

- Lightning bolt Harry Potter style

- Random mandelbrot-like visuals (tidyverse-edition without loop)

- Brownian Bridge Animation 1: Minimal Paths

- Brownian Bridge Animation 4: The Shrieking Eels

- Generate generative art with R

- Generate a red sticker

- Generate a lightbulb style thingy

- Generate a weird wing-thingy

- Generate (tweetable) mathematical art

- Generate crochet patterns

- Generate Perlin noise

- Generate Julia Set flowers

- Mathematical art

- Use `ggtextures` to make your own `ggplot2::geom_*()`, such as `ggbarf::geom_barf`

- Call forking GIFs into your RStudio session with the `bortels::fork` function

- Coloring image for `object of type closure is not subsettable`

Easter eggs

- Run `????""`, via Reddit

- Run `example(readLine)`, via DecisionStats

- Run `?.Internal`, via DecisionStats