Last year witnessed the creation of many novel types of data visualization. I have already discussed some of the lesser-known ones, jokingly referred to as xenographics.

Two new visualization formats seem to be sticking around, though. And, as always, it was not long before someone created dedicated R packages for them. Get ready to meet waffleplots and swarmplots!

Waffleplot

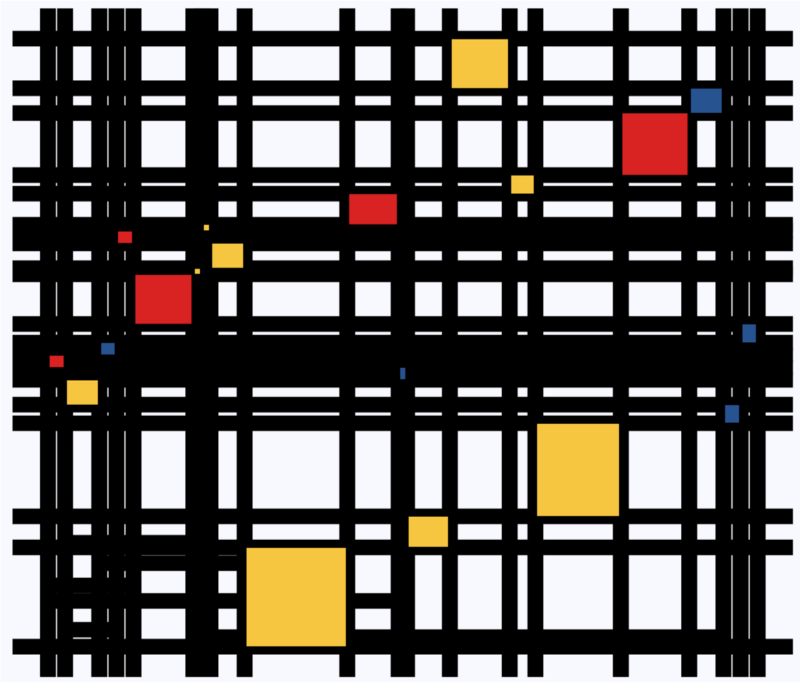

Waffleplots, also called square pie charts, are very useful for communicating parts of a whole for categorical quantities. Bob Rudis, scholar and R developer among many other things, did us all a favor and created the R waffle package.

First, we need to install and load the waffle package.

install.packages("waffle") # install waffle package

library(waffle) # load the package

I will use the famous iris data to demonstrate both plots.

Since waffleplots work with frequencies, I will specifically use the iris$Species data stored as a frequency table.

(spec <- table(iris$Species))

setosa versicolor virginica

50 50 50

Now let’s produce our first waffle plot:

waffle(spec)

Here, we see every single flower in the iris dataset represented by a tile. This provides an immediate visual representation of the group sizes in the dataset. Looks pretty, huh?

We can also play around with the display settings. For instance, let’s change the number of rows and the placement of the legend. Because it builds on ggplot2, the waffle package works very intuitively:

waffle(spec, rows = 3, legend_pos = "bottom")
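To get a feel for what the rows argument does to the layout, here is a rough sketch in base R of the grid size you can expect. It assumes one tile per flower and that the tiles fill a grid with the requested number of rows; the variable names are mine, not part of the waffle package.

```r
# Frequency table of the three iris species (50 flowers each)
spec <- table(iris$Species)

rows <- 3                            # same value as in the call above
total_tiles <- sum(spec)             # one tile per flower
cols <- ceiling(total_tiles / rows)  # columns needed to fit all tiles

c(rows = rows, cols = cols)
```

With few rows the plot becomes very wide, which is why placing the legend at the bottom tends to work well here.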

Or, in case we want to highlight a specific value, we could play around with the colors a bit.

waffle(spec, rows = 15, colors = c("lightgrey", "darkgrey", "red"))

The plot is a bit crowded, though, with each flower as a separate tile. We can simply reduce the number of tiles by dividing the values in our frequency table, like so:

# do not forget to annotate what each square represents!

w1 <- waffle(spec / 10, rows = 5, xlab = "1 square = 10 flowers")

w1
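The division works out neatly here because every species has exactly 50 flowers. For counts that do not divide evenly, it may be safer to round explicitly and build the annotation from the same number. A minimal sketch, where scale_counts is a hypothetical helper of my own and not part of the waffle package:

```r
# Hypothetical helper (not part of waffle): scale a frequency table down
# so that one tile stands for `per_tile` observations.
scale_counts <- function(freq, per_tile) {
  tiles <- round(freq / per_tile)  # make sure tile counts are whole numbers
  label <- sprintf("1 square = %d flowers", per_tile)
  list(tiles = tiles, label = label)
}

spec <- table(iris$Species)
scaled <- scale_counts(spec, per_tile = 10)

scaled$tiles  # tile counts per species
scaled$label  # matching annotation for the plot

# With the waffle package loaded, this could then be plotted as:
# waffle(scaled$tiles, rows = 5, xlab = scaled$label)
```

Keeping the divisor and the label in one place avoids an annotation that no longer matches the plot after you change the scale.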

Finally, you might want to combine multiple waffles into a single visual. You can do this with the accompanying, aptly named waffle::iron function, like so:

iron(

waffle(spec / 5, rows = 5, title = "iron() combines waffles"),

w1

)

I am definitely going to use this package in my daily work. I just love the visual simplicity.

As a final remark, the waffle GitHub page states that the use_glyph argument can be used to replace the tiles with pictures from the extrafont package. However, I could not get the code to work.

The ggplot2 waffle extension geom_waffle is being developed as we speak, but is not yet hosted on CRAN.

Swarmplot

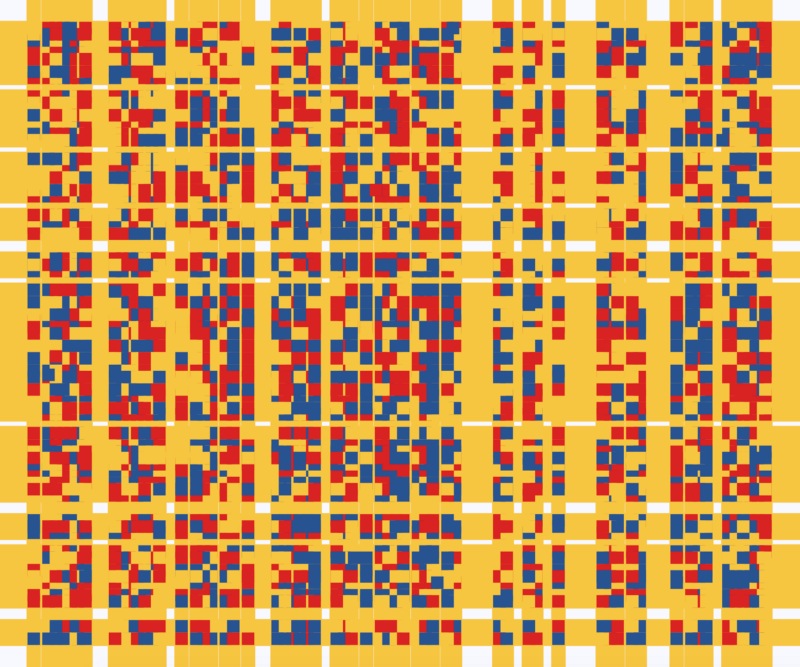

A second innovation comes in the form of the so-called swarmplot, or beeswarmplot, and is already hosted on CRAN in the form of the ggbeeswarm package, developed by Erik Clarke and Scott Sherrill-Mix.

Some examples hosted on the GitHub page also use the iris dataset, so you can have a look at those. However, I made new visuals because I prefer theme_light. Hence, I first install the ggbeeswarm package along with ggplot2, and then set the default theme to theme_light.

install.packages("ggplot2")

install.packages("ggbeeswarm")

library(ggplot2)

library(ggbeeswarm)

theme_set(theme_light()) # sets a default ggplot theme

geom_beeswarm

There are two main functions in the ggbeeswarm package, geom_beeswarm and geom_quasirandom. Let’s start with the actual beeswarm.

Generating a beeswarm plot is as simple as any other ggplot:

ggplot(iris, aes(Species, Sepal.Length)) + geom_beeswarm()

As this is an “official” ggplot2 extension, most functionality works the same as in any other geom_*. Thus, adding colors or increasing point size is easy:

ggplot(iris, aes(Species, Sepal.Length, col = Species)) + geom_beeswarm(size = 2)

For larger sizes, you might want to adjust the spacing between the points using the cex argument.

ggplot(iris, aes(Species, Sepal.Length, col = Species)) + geom_beeswarm(size = 3, cex = 3)

Points in a beeswarmplot are automatically plotted side-by-side, grouped on the X variable, but you can turn that off with the groupOnX argument.

ggplot(iris, aes(Species, Sepal.Length, col = Species)) + geom_beeswarm(groupOnX = FALSE)

Finally, if you have another grouping variable besides those on the axes (e.g., whether Sepal.Length is large, as below), you might want to use the dodge.width argument to separate the groups.

ggplot(iris, aes(Species, Sepal.Length, col = Sepal.Length > 5)) + geom_beeswarm(dodge.width = 0.5)
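Mapping a logical expression straight to col gives a legend titled Sepal.Length > 5 with TRUE and FALSE entries. If you want a more readable legend, one option is to store the grouping as a labelled column first. A small sketch, where the column name Sepal.Size and its labels are my own choices:

```r
library(ggplot2)
library(ggbeeswarm)

# Add a descriptive grouping column (hypothetical name: Sepal.Size)
iris_grouped <- transform(
  iris,
  Sepal.Size = ifelse(Sepal.Length > 5, "long (> 5 cm)", "short (<= 5 cm)")
)

# Same plot as above, but coloured and dodged by the labelled column
p <- ggplot(iris_grouped, aes(Species, Sepal.Length, col = Sepal.Size)) +
  geom_beeswarm(dodge.width = 0.5)
p
```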

geom_quasirandom

The second function in the ggbeeswarm package is geom_quasirandom, an alternative to the original geom_jitter. Basically, it’s a convenient tool to offset points within categories to reduce overplotting.

ggplot(iris, aes(Species, Sepal.Length, col = Species)) + geom_quasirandom()

Instead of the quasirandom offset, the geom allows for many other methods, including a smiley face pattern : )

ggplot(iris, aes(Species, Sepal.Length, col = Species)) + geom_quasirandom(size = 2, method = "smiley")
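To compare a few of these methods without retyping the plot each time, you could loop over the method names. This is only a sketch; the selection of methods below is mine, and the full list is in the geom_quasirandom documentation.

```r
library(ggplot2)
library(ggbeeswarm)

# A few of the offset methods accepted by geom_quasirandom()
methods <- c("quasirandom", "pseudorandom", "tukey", "smiley")

# Build one plot per method, titled with the method name
plots <- lapply(methods, function(m) {
  ggplot(iris, aes(Species, Sepal.Length, col = Species)) +
    geom_quasirandom(method = m) +
    ggtitle(paste("method =", m))
})
names(plots) <- methods

plots[["tukey"]]  # print any single plot by name
```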

There is also an earlier package on CRAN, called beeswarm, but it doesn’t seem to be maintained anymore. Moreover, its syntax more or less resembles R’s base::plot, whereas I personally have a strong preference for ggplot2.